AI Risk Mitigation

AI risk mitigation is the process of identifying, assessing, and reducing the potential negative impacts of AI technologies. It's a part of the broader field of AI governance, which establishes the rules and standards for AI research, development, and application.

In What We Owe the Future, William MacAskill gives three reasons why “We are often in a position of deep uncertainty with respect to the future…” (2022, p. 237):

- “There are strong considerations on both sides.” His example here is whether to speed up or slow down AI development.

- “as well as weighing competing considerations we are aware of, we also need to try to take into account the considerations we haven’t yet thought of”

- “even in those cases where we know a particular outcome is good to bring about, it can be very difficult to make that happen in a predictable way”

With all of this in mind, this short essay argues for the importance of balancing several perspectives on AI risk mitigation.

This chart by Ryuichi Maruyama shows that perspectives on AI risk diverge in two key ways:

- The timescale of the AI harms we should focus on

- The degree of regulation those harms warrant

Together, these two axes create three “camps”:

#1 AGI risk is real

#2 AI harm is here and now

#3 less regulation for steady progress

I personally find myself slightly above the AI ethics perspective, as indicated by my annotation.

In this essay I will explain my reasoning for that position and make a direct case for the value of a balanced approach between camps #1 and #2 to inform humanity’s AI risk mitigation efforts.

My position on each of the three camps is as follows:

In my opinion, however, it is even more important that experts can’t agree on how to act.

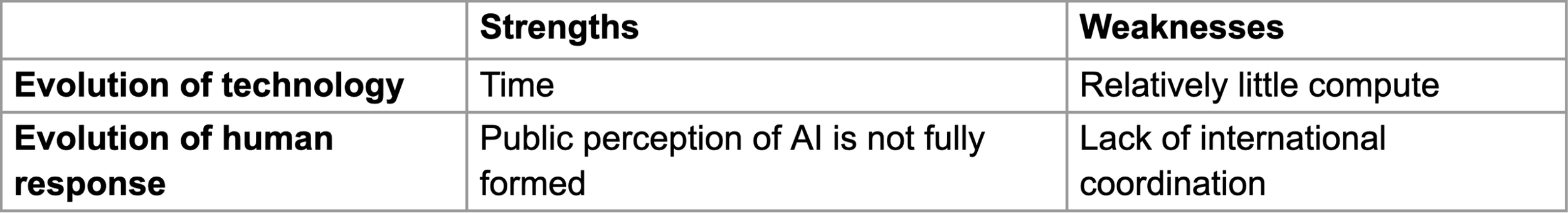

So what would meaningful action look like? Let’s first carefully consider the strengths and weaknesses of our current position.

- [Forthcoming]

- [Forthcoming]

- [Forthcoming]

Let’s unpack these strengths and weaknesses, starting with the evolution of technology. Time may sound like an obvious advantage, but what matters is how we use that time from an AI risk mitigation standpoint. Are we convincing the American government to develop bipartisan policies for a range of AGI scenarios? Are we easing tensions with historically hostile states such as China to make joint safety research possible? Are we raising capital for alignment research so that, if we pass the “knee” of an intelligence explosion curve, we can immediately take advantage of our heightened access to compute and automated AI research?

[More forthcoming]

Source: Leopold Aschenbrenner

Source: Ryuichi Maruyama